If you’d like to jump straight to the code, please visit my GitHub repo:

https://github.com/kr1210/Flutter-Real-Time-Image-Classification

Making a smartphone app from scratch might seem a daunting task to most newbies, and maybe even to seasoned programmers. Enter Flutter.

As one of the promising, albeit untested, technologies unveiled by Google for Android app development, Flutter aims to make smartphone app development easier than ever. It is even rumored that Flutter will soon be the go-to name in mobile app development. Creating UIs is a breeze, and the developer-friendly coding style is the icing on the cake.

Working as an AI engineer, one of the most common problems my teammates and I encounter is the deployment of our models. How do you prototype your deep learning models in a quick yet elegant way? The answer is carried around in our very pockets. Smartphones. That’s right. That overpowered piece of hardware on which you are possibly reading this article at this very moment is any developer’s dream come true. Combine the ubiquitous nature of smartphones with the versatile technology that is machine learning, and you’re looking at vast potential for innovation right there.

Now, I work at a place where people are often expected to roll up their sleeves and get the job done rather than wait for help. So, on one such occasion, the matter of deployment loomed ahead of me, and I had to choose between the daunting world of native Android development and an exciting, yet uncharted, new tech called Flutter. I chose the latter. At the time of writing this article, not every aspect of Flutter is super stable, but I expect those problems to be ironed out as it matures. In this article, I shall describe the important aspects of integrating deep learning models into an Android app made with Flutter. While I am neither an experienced Android developer nor an experienced Flutter developer, I was able to get a base version working in about a week. That’s how easy it is.

I assume the reader has a basic understanding of layouts in Flutter and of the programming language used, which is Dart. If you are unfamiliar with either, a few beginner posts will get you on your way. While the methods and code in this project are not exactly what I would call optimized, they can serve as a starting point or a base for your own projects. So let’s dive in.

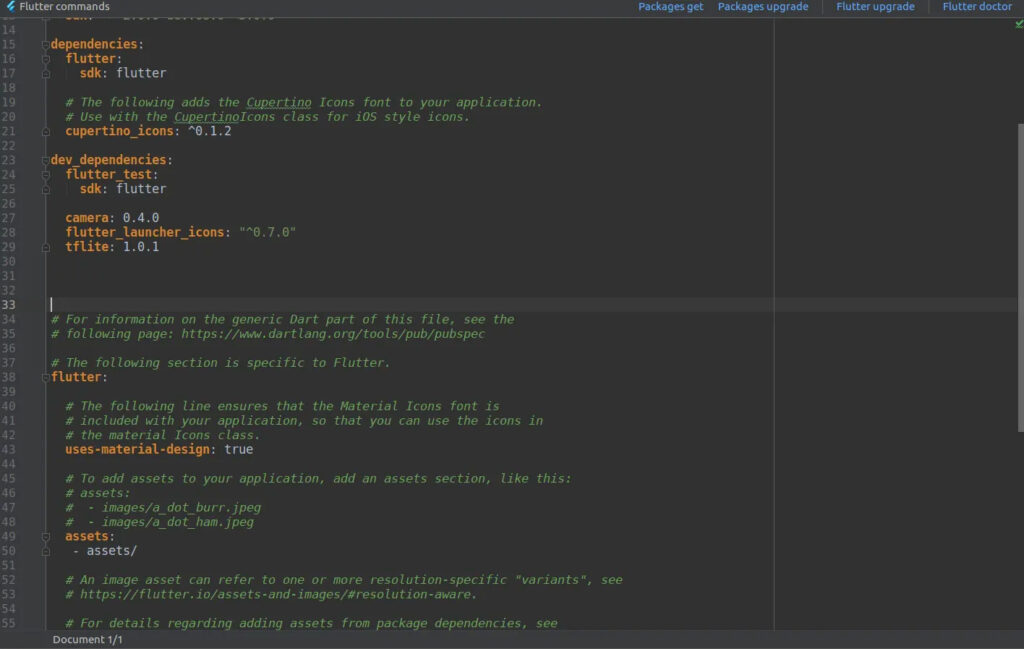

We will be using the tflite plugin to integrate our neural network with the app. To use it, we first need to declare it as a dependency and register our model file as an asset. There are three steps to follow:

- Place the model file (in .tflite format) in the assets folder, along with the labels.txt file, which contains the names of the classes used.
- Declare these files under assets in pubspec.yaml so that they are bundled with the app, and declare the plugin there as well.
- In android/app/build.gradle, add the following setting in the android block:

      aaptOptions {
          noCompress 'tflite'
          noCompress 'lite'
      }

So let’s open up our pubspec.yaml file.

Here, you can see that I’ve specified tflite: 1.0.1 as a dev_dependency. The plugin is now ready to be imported and used in our app. It’s (almost) that simple. When Flutter runs the packages get command, the files required by the plugin are downloaded without any further action on our part.
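In case the screenshot is hard to read, the relevant parts of pubspec.yaml might look roughly like the fragment below. Treat it as a sketch rather than a copy of the project file: the asset file names are hypothetical placeholders, and the plugin is listed under dev_dependencies only to match the description above. As far as I know, the plugin's README adds it under dependencies, which is the more conventional place for a package that app code imports.

```yaml
dev_dependencies:
  # Version taken from this article; listed here to mirror the text above.
  tflite: 1.0.1

flutter:
  assets:
    # Hypothetical file names; use the names of your own model and labels.
    - assets/model.tflite
    - assets/labels.txt
```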

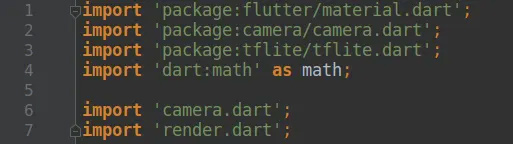

Now let’s open up our home.dart file, the page that contains the button labeled “start classification”.

As you can see, I’ve imported the tflite plugin on line 3 of home.dart, similar to how I would import library files in other standard programming languages.

The tflite plugin comes bundled with a function called loadModel(), which takes the name of the model file to be loaded and the labels.txt file, which contains the names of the classes used.

For clarity, I’ve wrapped this call in a function of my own, which is also called loadmodel().
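A wrapper of this kind could be sketched as follows. This is not the exact code from the screenshot: the function name loadClassifier and the two asset paths are made up for illustration, and only the model and labels arguments of Tflite.loadModel() are used.

```dart
import 'package:tflite/tflite.dart';

// Hypothetical asset paths; use the names declared in your pubspec.yaml.
const String modelAsset = 'assets/model.tflite';
const String labelsAsset = 'assets/labels.txt';

/// Thin wrapper around the plugin's loadModel() call.
/// The name loadClassifier is hypothetical.
Future<void> loadClassifier() async {
  final result = await Tflite.loadModel(
    model: modelAsset,
    labels: labelsAsset,
  );
  // Print whatever the plugin reports, which helps when debugging asset paths.
  print('Tflite.loadModel returned: $result');
}
```

A function like this would typically be awaited once, before the camera stream starts, for example from the handler of the “start classification” button.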

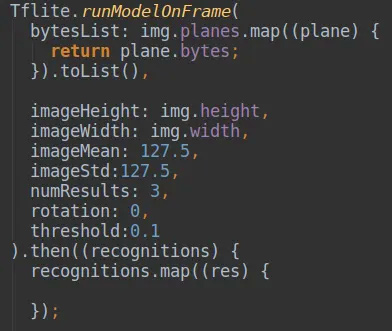

Now let’s move on to the camera.dart file, where the actual inference takes place. Here, we use the function runModelOnFrame(), which takes a frame from the camera stream and feeds it to the model as input. This function requires several arguments, such as the frame, its height and width, the number of results to be returned, and so on.
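As a rough sketch of such a call, assuming the frames come from the camera plugin as CameraImage objects: the helper name classifyFrame and the value of numResults are illustrative choices, not taken from the project, and the remaining optional arguments of runModelOnFrame() are left at their defaults.

```dart
import 'package:camera/camera.dart';
import 'package:tflite/tflite.dart';

/// Runs the previously loaded model on a single camera frame.
/// The name classifyFrame is hypothetical.
Future<dynamic> classifyFrame(CameraImage frame) async {
  final recognitions = await Tflite.runModelOnFrame(
    // One byte buffer per image plane of the frame.
    bytesList: frame.planes.map((plane) => plane.bytes).toList(),
    imageHeight: frame.height,
    imageWidth: frame.width,
    numResults: 2, // illustrative: keep only the two best classes
  );
  return recognitions;
}
```

With the camera plugin, a helper like this would usually be called from the callback passed to the controller's startImageStream(), skipping frames while a previous inference is still running.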

The output is received in the format specified by the plugin’s README file found on Flutter Pub:

    {
      index: 0,
      label: "person",
      confidence: 0.629
    }

This output is received by the recognitions variable.

The output received from the model is overlaid on the image stream from the camera in the render.dart page. To do this, the results obtained from the model are passed into the render.dart page as a list named, well, results. These results are then displayed on the screen along with the confidence percentage.
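The text shown for each result can be built with a small helper like the one below. It is only a sketch: formatRecognition is a hypothetical name, and it assumes each entry in results is a map with the label and confidence keys shown in the output format above.

```dart
/// Turns one recognition map into display text such as "person 62.9%".
/// The name formatRecognition is hypothetical.
String formatRecognition(Map<dynamic, dynamic> recognition) {
  final String label = recognition['label'].toString();
  // confidence is a fraction between 0 and 1; convert it to a percentage.
  final double confidence = (recognition['confidence'] as num).toDouble();
  return '$label ${(confidence * 100).toStringAsFixed(1)}%';
}
```

For the sample output shown earlier, this returns "person 62.9%", which can then be placed in a Text widget on top of the camera preview.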

All the code and project files are available on my GitHub: https://github.com/kr1210/Flutter-Real-Time-Image-Classification